- For developers

- Industry insights

- People in tech

- Tech trends at BEECODED

- 16 Apr 2025

12 Natural Language Processing techniques used to process text by data scientists

Natural Language Processing techniques are important in artificial intelligence because they facilitate the interaction between machines and human language.

Table of contents

Contributors

Natural Language Processing (NLP) is a sub-domain of artificial intelligence that enables computers to understand and process human language. To make text processing successful, data scientists rely on a variety of techniques, and in this article, we’ll explore 12 of the most commonly used ones. Let’s begin!

Why are natural language processing techniques important?

Natural Language Processing techniques are important in artificial intelligence because they facilitate the interaction between machines and human language.

These techniques involve machine learning algorithms, which subsequently enable text analysis and classification, providing accurate interpretations of linguistic data and improving the efficiency of automated systems.

Key NLP techniques for text processing

The following techniques are part of NLU (Natural Language Understanding). They are the foundation of text processing, as their role is to transform natural language into structured data that can be analyzed.

If you want to learn more about NLP, NLU, and NLG, read our article here.

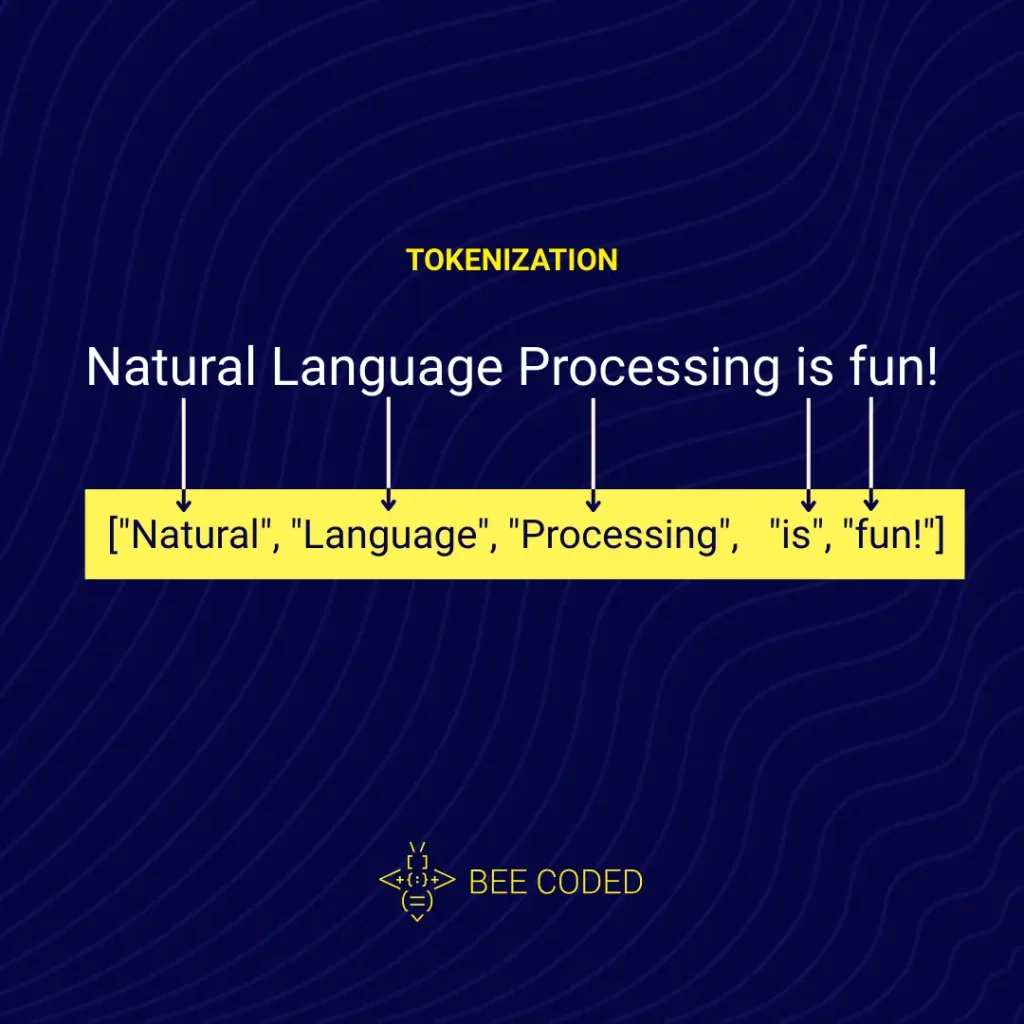

Tokenization

Tokenization divides the text into smaller units called tokens (words, phrases, or characters). This allows the system to analyze each component of the text separately for a better understanding of the context. This division is typically done by a separator, and the one most often used by tokenizers is the blank space.

Here is an example:

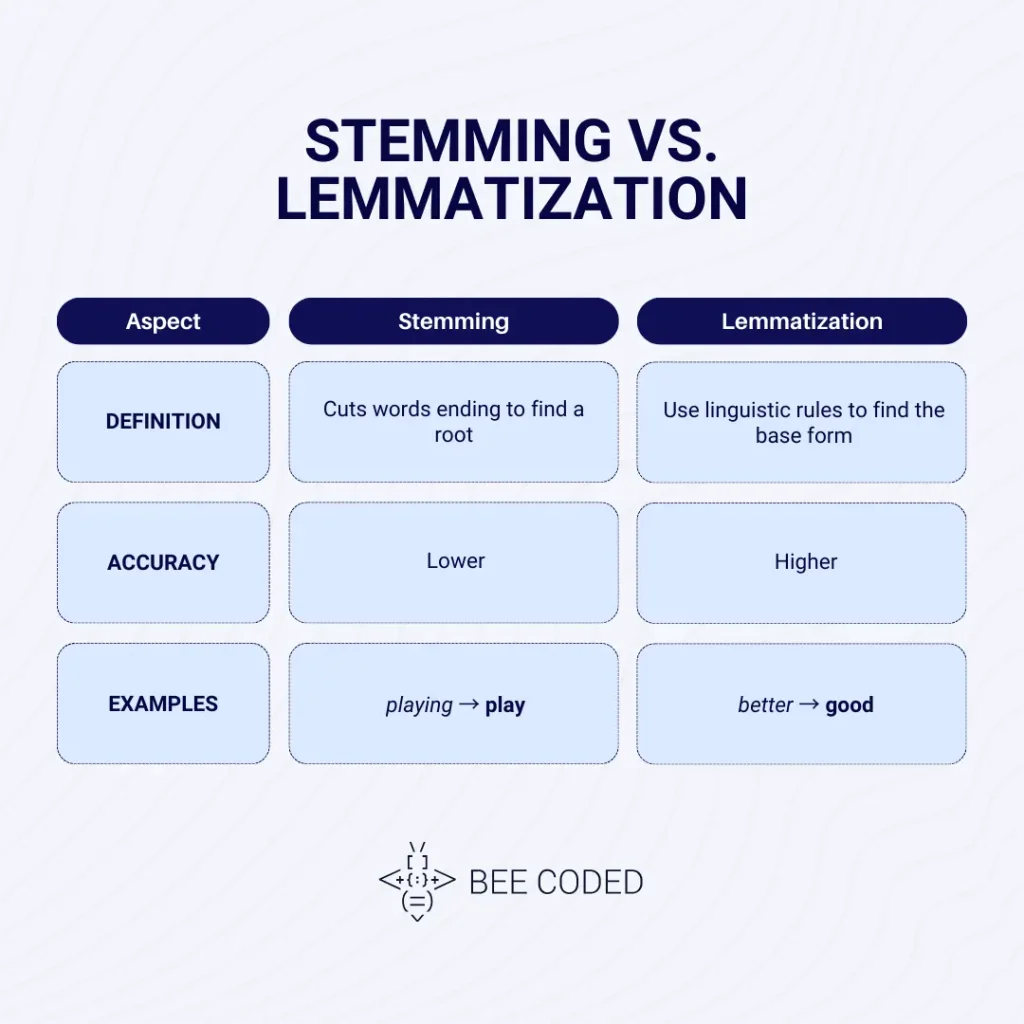

Stemming and Lemmatization

What these two natural language processing techniques have in common is that they both aim to identify the root form of the word. However, the two techniques do this differently, as follows:

- Stemming: this one removes the end of a word (e.g. playing, player, played – all will become play)

- Lemmatization: this is a more advanced technique that uses morphological analysis to determine the base word. While stemming can make errors (e.g. studies can become studi), lemmatization understands the actual root, so a word like better will become good.

Morphological segmentation

Morphological segmentation breaks words into morphemes (the smallest units of language that contain meaning). For example, the word replayed can be split like this:

– re- is a prefix indicating a repeated or reversed action.

– play is the root of the word, meaning “to play”.

– ed is the suffix indicating the past tense of the verb.

Stop Words Removal

This technique involves eliminating words that are repeated and do not add value or meaning. Generally, these words are conjunctions (such as: and, because) and prepositions (like in, on, etc).

Keyword Extraction

This technique identifies the most important terms in a text. To determine the relevance of a word, the following factors are analyzed:

– word frequency

– position of the word in the text

– relationships with other words.

Word Vectorization and Word Embedding

Word vectorization turns words into numbers so that they can be processed by a computer, placing similar words close to each other. For example, words like tree and oak will be closer together than tree and car. Word embedding is a more advanced method that learns the relationships between words based on the context in which they appear.

TF-IDF

TF-IDF (Term Frequency-Inverse Document Frequency) is a statistical method that shows the importance of a word in a document or collection of documents. This technique combines the frequency of a term in a document (TF) with its rarity in the entire collection (IDF).

In SEO, TF-IDF is used to evaluate the relevance of web pages for certain keywords, thus helping to optimize content.

Text Summarization

Text summarization generates condensed versions of documents, retaining the most important information and main ideas. There are two approaches: extractive summarization (selects important sentences from the original text, without changes) and abstract summarization (generates new sentences that capture the essence of the text).

Text classification and Semantic role labeling (SRL)

These techniques provide insight into text structure and meaning:

- Text classification categorizes documents into predefined classes.

- Semantic role labeling identifies the relationships between predicates in sentences and their arguments.

Sentiment analysis

Sentiment analysis determines the emotional tone of a text, usually categorizing it as positive, negative, or neutral. Algorithms can identify emotional nuances and provide valuable insights into people’s attitudes.

Topic modeling

Topic modeling involves identifying the main themes or topics in a collection of documents. It analyzes word distributions to discover groups of terms that frequently occur together and represent coherent topics.

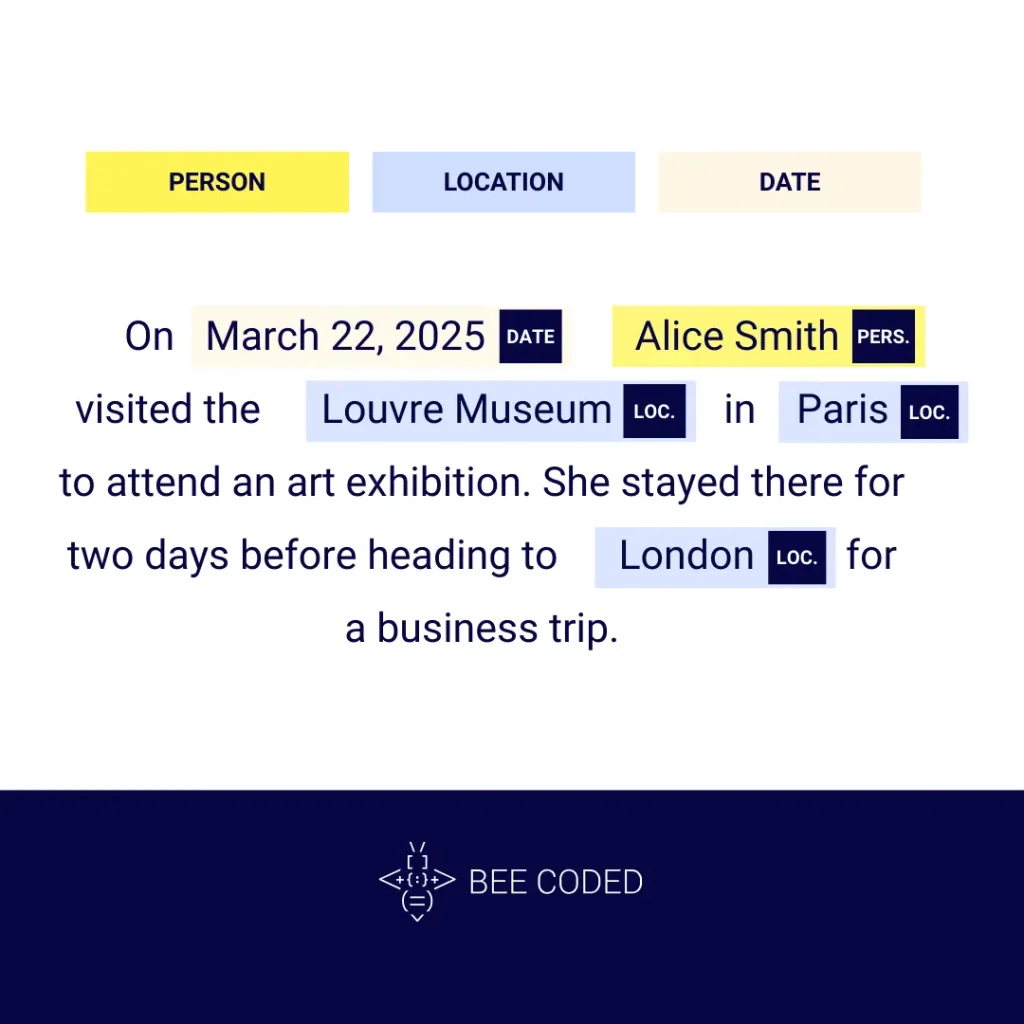

Named entity recognition

Named Entity Recognition (NER) identifies and classifies entities in text into predefined categories such as persons, organizations, locations, etc. This technique helps extract structured information from unstructured text and is used in numerous applications, from search engines to legal or medical document analysis systems.

We develop software powered by NLP techniques to understand, analyze, and communicate effectively with your customers

The Bee Coded Team is here to help you develop advanced software solutions that use NLP techniques to understand, analyze, and communicate effectively with your customers.

Our services include chatbot development, data analysis, software documentation, and SaaS solutions that optimize your processes and give you a real competitive advantage.

What is natural language generation? (NLG)

What is natural language processing (NLP)?

Money in Motion: First App to Integrate Open Banking for All Romanian Banks and Revolut, Partnered with Finqware